
Geodetic observatory Pecný


EUREF data center - Implementation


New data center implementation (2002)

In 2002, the data center was completely re-implemented and became the official EUREF Permanent Network (EPN) data center.

The main goals of the re-implementation were:

- to increase transfer efficiency (non-redundant traffic load)

- to decrease storage redundancy

- to increase robustness against the outage of a single source

- to enable easy setup and configuration

- to preserve or further reduce the low latency of the hourly files

- to enable dynamic adaptation of the source priority

The software package developed to operate the GOP data center works, in principle, in three steps:


- checking the local data content,

- providing data transfer,

- converting the data.
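The three steps above can be illustrated with a minimal sketch. It is not the original Perl code: the function `run_cycle` and its parameters (`expected`, `local`, `fetch`, `convert`) are hypothetical names introduced here, and the archive is reduced to a set of file names.

```python
from typing import Callable, Iterable, List, Set


def run_cycle(expected: Iterable[str],
              local: Set[str],
              fetch: Callable[[str], bool],
              convert: Callable[[str], None]) -> List[str]:
    """Run one check/transfer/convert cycle and return the converted files."""
    # Step 1: check the local data content, so only missing files are requested.
    missing = [name for name in expected if name not in local]

    # Step 2: transfer the missing files; fetch() returns True on success.
    fetched = [name for name in missing if fetch(name)]
    local.update(fetched)

    # Step 3: convert only the data that was newly completed.
    for name in fetched:
        convert(name)
    return fetched
```

Because the local check comes first, a file that is already stored is never transferred again, which is one simple way to keep the traffic non-redundant.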

The software is written in Perl and has the following features:

- high modularity

- operating in threads

- getting data by: incoming, downloading, mirroring, unique mirroring

- integrating special procedures for each data type (RINEXO, SP3, etc.)

- data concatenation from hourly to daily, from station to global

- monitoring data quality and the archive

- effective and simple configuration

- supporting different projects (anonymous data, password protected data)

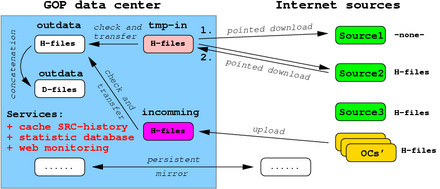

The new implementation uses strictly non-redundant data transfer and storage. About two thirds of the data storage capacity and of the traffic load were saved compared to the previous implementation. Thanks to the modularity, data fetching, conversion and storage are separated. Different sources (incoming, download, mirror, etc.) can be integrated easily, and since 2004 hourly files generated from real-time data streams have been supported. A cascade of collecting procedures thus co-exists: for example, if real-time data are not available, the standard download procedure follows.
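The cascade of collecting procedures can be sketched as an ordered list of sources that is tried until one delivers the file. This is an illustration only, not the original Perl code: the function `fetch_with_cascade`, the source labels and the choice of `OSError` as the outage signal are all assumptions made for the example.

```python
from typing import Callable, Optional, Sequence, Tuple

# A source is a label plus a callable that returns the file content,
# or None when the file is not available from that source.
Source = Tuple[str, Callable[[str], Optional[bytes]]]


def fetch_with_cascade(filename: str,
                       sources: Sequence[Source]) -> Optional[Tuple[str, bytes]]:
    """Try the sources in priority order and return the first successful result."""
    for label, fetch in sources:
        try:
            data = fetch(filename)
        except OSError:
            data = None  # assumption: an outage surfaces as OSError
        if data is not None:
            return label, data
    return None  # no source could deliver the file in this run
```

Changing the order of `sources` changes the priority, so the same structure would also accommodate a priority that is adapted dynamically.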


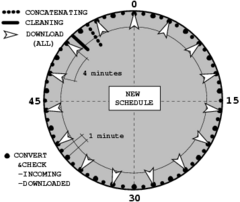

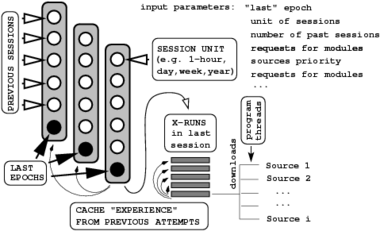

Every download process runs in a dedicated thread that handles the data grouped for a single source. Each thread is given a limited time interval, after which the process is stopped. Unfinished data can be completed in the next run, because every run is preceded by a check of the local data center content. Because the download is separated from the data conversion, the two processes can be scheduled at different intervals: downloads are started every 4 minutes, while conversions of all completed data run every 1 minute.
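The per-source threads with a time limit can be sketched as follows. This is a simplified illustration under stated assumptions, not the original Perl code: `download_run`, `wanted`, `local`, `fetch` and `time_limit` are hypothetical names, and the time limit is enforced cooperatively (checked between files) rather than by stopping a transfer that is already in progress.

```python
import threading
import time
from typing import Callable, Dict, List, Set


def download_run(wanted: Dict[str, List[str]],
                 local: Set[str],
                 fetch: Callable[[str, str], bool],
                 time_limit: float) -> None:
    """Start one thread per source; each thread stops at the shared deadline."""
    deadline = time.monotonic() + time_limit
    lock = threading.Lock()

    def worker(source: str, names: List[str]) -> None:
        for name in names:
            if time.monotonic() >= deadline:
                return  # out of time; the remaining files wait for the next run
            if fetch(source, name):
                with lock:
                    local.add(name)

    # Local content check: only files not yet in the archive are queued.
    threads = [
        threading.Thread(target=worker,
                         args=(source, [n for n in names if n not in local]))
        for source, names in wanted.items()
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
```

Calling `download_run` again with the same `wanted` mapping picks up only what is still missing, which mirrors how an unfinished run is completed by the next one. An external scheduler could start such a run every 4 minutes and a separate conversion step every minute.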